Down the rabbit hole of controlling depth perception in 3D VR video with AI stereo baseline modification

Dear reader, I hope you will enjoy this journey down the rabbit hole of 3D video, perception of size and scale in VR, and how we can modify it for comfort or artistic effect, using computer vision software (or complex optics and lenses).

3D video creates a feeling of depth and immersion by showing a different image to the left and right eye. These images are captured by two separate cameras or lenses, which are some distance apart. The distance between the centers of the lenses is called the “baseline”, or “interpupillary distance” (IPD). This concept applies both to VR video such as VR180, and to rectangular (rectilinear) 3D video. With VR180 video, to create a realistic perception of scale, the two cameras need to be the same distance apart as the viewer’s eyes. Median IPD for humans is about 6.4cm. Many high-end VR180 cameras don’t have exactly a 6.4cm baseline, which results in the videos feeling slightly off, as if things are a little too big or too small, or too close or too far away. For example, one of the most popular high-end VR180 cameras, the Canon R5 with dual fisheye lens, has its lenses 6cm apart, which causes the 3D to feel a little bit off in scale.

To fix this, some filmmakers have taken apart their dual fisheye lenses and modified them (a sweat-inducing undertaking for a $1,799 lens). Now there is another option: use software to adjust IPD in the video after recording. We just launched this experimental feature in UpscaleVideo.ai 1.09. In this article, I’m going to explain why this is a powerful tool for 3D filmmakers, and explore some other interesting examples of 3D filmmaking that bend the rules of size and scale.

Consider the video below, which shows the result of adjusting IPD in a VR180 photo captured with a Canon R5 with dual fisheye lens (using software; we didn’t take our lens apart). The video sweeps the IPD from 0.5x to 1.5x its original value (from 3cm to 9cm). In other words, it shows what the photo would look like if we moved the two lenses closer together or farther apart.

My name is Forrest Briggs, Ph.D., and I am the founder of Lifecast.ai. We make UpscaleVideo.ai, a tool for Windows and Mac that does super resolution, de-noising and de-blurring. We also make software for volumetric video and neural radiance fields.

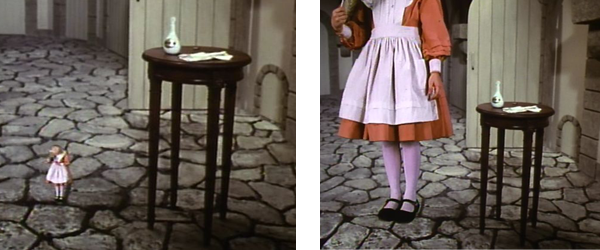

Ten years ago, I was writing open source software for stitching 3D VR video at Facebook. After that, I worked on the perception systems for self-driving cars at Lyft and human-sized robots at Google X. One of the weirdest experiences I had in my early days of hacking on 3D VR photos at Facebook made me feel like Alice in Wonderland. I took some photos of my apartment with a couple of GoPros and wrote some software to stitch them into a VR photo, which I viewed on GearVR (a state-of-the-art device at the time). The cameras were too close together and there was a bug in my code, so it felt like being in my apartment, only I was tiny and everything was mirrored.

I have spent a lot of time over the past decade thinking about how to make 3D/VR video better, and talking with some of the best immersive filmmakers in the industry. Generating images from the point of view of a simulated camera that is moved relative to a real camera is one of my specialties (this is called “novel view synthesis” or “view interpolation” in the literature). We have a Canon R5 at Lifecast for testing, so I am familiar with how much the dual fisheye lens costs. So when I heard that people are taking these lenses apart and modifying them to bring the IPD to 6.4cm, I started thinking maybe there is a better way: can we do this in software? The answer is yes. Great, now what can we do with this and why does it matter?

We can film 3D content with simpler camera systems, then modify the baseline after the fact using software, to get better comfort, realism, or artistic effect.

Getting the distance between cameras right isn’t only a problem for VR video. James Cameron used a custom camera with beam splitter optics to film Avatar 2. One of the challenges they faced is that to get cinematic image quality you need big image sensors and lenses, and two of these cannot occupy the same space at once, hence there is a limit to how close together they can be, without advanced optics like beam splitters.

Roughly speaking, moving the cameras farther apart makes the 3D scene look smaller (like you are a giant whose eyes are far apart, looking at a miniature scene), and moving the cameras closer together makes the scene appear as it would to an ant: everything is huge and far away. There is a lot of freedom to vary stereo baseline for artistic effect in traditional 3D video that is viewed in theaters with 3D glasses, while in VR video the goal is usually to get as close as possible to reality.
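
To put rough numbers on this, here is a minimal sketch of a common first-order model: perceived scene scale is approximately the viewer's IPD divided by the camera baseline. This ignores other depth cues (familiar object sizes, motion, focus), so treat it as an approximation. The function name `perceived_scale` is just an illustrative choice, not something taken from UpscaleVideo.ai.

```python
def perceived_scale(camera_baseline_cm: float, viewer_ipd_cm: float = 6.4) -> float:
    """Approximate ratio of perceived scene size to real scene size.

    First-order geometric model (an assumption, not a perceptual law):
    a baseline narrower than the viewer's IPD makes the scene look bigger,
    and a wider baseline makes it look smaller.
    """
    if camera_baseline_cm <= 0 or viewer_ipd_cm <= 0:
        raise ValueError("baseline and IPD must be positive")
    return viewer_ipd_cm / camera_baseline_cm


if __name__ == "__main__":
    # 3 cm and 9 cm are the ends of the 0.5x to 1.5x sweep of a 6 cm baseline.
    for baseline_cm in (3.0, 6.0, 6.4, 9.0):
        scale = perceived_scale(baseline_cm)
        print(f"baseline {baseline_cm:.1f} cm -> perceived scale {scale:.2f}x")
```

Under this model, a 6cm baseline viewed with 6.4cm eyes gives a scale of about 1.07x, which fits the feeling that the world is only slightly too big.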

For example, if you watch a 3D movie in a theater with a scene set in outer space, and you can see the spherical 3D shape of a planet, that is not a realistic baseline, but it might be a cooler effect.

There’s something that’s been bothering me about VR video. When you watch a traditional 2D film, there are lots of close-up shots of people’s faces during dialog (sometimes after an establishing shot), which is an important artistic tool for bringing focus to emotion. But in VR video, close-up shots are virtually non-existent. Everything is at the same scale (close to, but unintentionally slightly off from, 1:1 with reality). There isn’t as much artistic use of scale in VR video. A handful of exceptions can be found. For example, Apple’s recent 3D VR film “Submerged” has some close-up shots of faces. I appreciated the attempt to do this, but to me, these shots felt a bit distorted and uncomfortable (I suspect the math wasn’t right for how they warped the images to achieve this effect).

Another interesting example of unusual stereo baseline in VR video is David Attenborough’s Micro Monsters, which created VR180 macroscopic video using a combination of custom optics and VFX.

At Lifecast, we explored another approach to creating macro scale VR experiences. We scanned mushrooms with an iPhone, then used our open source neural radiance field engine to reconstruct photorealistic 3D models. Then we rendered the models using ray tracing from the point of view of simulated fisheye cameras that are impossibly close together, to create the perception that the mushrooms are 4 feet tall in VR.
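
As a back-of-the-envelope illustration of "impossibly close together", the sketch below estimates the simulated baseline needed to reach a target perceived height. It assumes the first-order model in which perceived scale is roughly viewer IPD divided by camera baseline. The 8cm real mushroom height is a made-up example value, not a measurement from our scans, and `baseline_for_perceived_height` is a hypothetical helper name.

```python
CM_PER_FOOT = 30.48


def baseline_for_perceived_height(
    real_height_cm: float,
    perceived_height_cm: float,
    viewer_ipd_cm: float = 6.4,
) -> float:
    """Camera baseline (cm) that makes an object appear a given height.

    Assumes perceived scale ~= viewer IPD / camera baseline, which is a
    simplification that ignores non-stereo depth cues.
    """
    if min(real_height_cm, perceived_height_cm, viewer_ipd_cm) <= 0:
        raise ValueError("all lengths must be positive")
    scale = perceived_height_cm / real_height_cm
    return viewer_ipd_cm / scale


if __name__ == "__main__":
    real_cm = 8.0  # hypothetical mushroom height, for illustration only
    target_cm = 4 * CM_PER_FOOT  # 4 feet tall in VR
    baseline = baseline_for_perceived_height(real_cm, target_cm)
    print(f"simulated baseline: {baseline * 10:.1f} mm")
```

With these assumed numbers the simulated lenses end up only a few millimeters apart, far closer than two physical fisheye lenses could sit.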

In UpscaleVideo.ai 1.09, we use a different approach that is suitable for video (not just static 3D models), and works with any stereoscopic input (VR180 or rectilinear). Here’s the secret sauce: we compute optical flow between the left and right images using RAFT (“Recurrent All-Pairs Field Transforms”). Then we warp the left and right images to generate an image from the point of view of a virtual camera. We construct the warp fields using forward 2D Gaussian splatting of texture coordinates, in order to handle occlusions with large displacement. This is already producing some interesting results, and we have a lot more ideas in the works for generating images from virtual cameras that do impossible things.
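
To make the idea concrete, here is a simplified NumPy sketch of forward Gaussian splatting of texture coordinates. It is not the UpscaleVideo.ai implementation. It assumes the flow from one eye's image to the other is already available as an `(H, W, 2)` array (for example from RAFT), uses nearest-neighbor sampling for brevity, and resolves occlusions with a heuristic of my choosing for this example: pixels with larger displacement are treated as nearer and weighted more. The names `splat_warp_field`, `render_virtual_view`, `sigma`, `radius` and `beta` are all illustrative.

```python
import numpy as np


def splat_warp_field(flow, t, sigma=0.5, radius=1, beta=1.0):
    """Forward-splat source texture coordinates into a virtual view.

    flow: (H, W, 2) displacement (dx, dy) from this image to the other eye.
    t: fraction of the flow to move; 0 keeps the view, 1 reaches the other eye.
    Returns (coords, filled): for each target pixel, the (x, y) source
    coordinate to sample, and a mask of pixels that received any splat.
    """
    h, w = flow.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    tx = xs + t * flow[..., 0]  # where each source pixel lands
    ty = ys + t * flow[..., 1]
    bx, by = np.rint(tx).astype(int), np.rint(ty).astype(int)

    # Occlusion heuristic (assumption): larger displacement means nearer.
    mag = np.hypot(flow[..., 0], flow[..., 1])
    near = np.exp(np.clip(beta * (mag - mag.max()), -50.0, 0.0))

    acc = np.zeros((h, w, 2))
    wsum = np.zeros((h, w))
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            px, py = bx + dx, by + dy
            ok = (px >= 0) & (px < w) & (py >= 0) & (py < h)
            d2 = (px - tx) ** 2 + (py - ty) ** 2
            wgt = np.exp(-d2 / (2.0 * sigma**2)) * near
            # Texture coordinate this source pixel implies at (px, py).
            sx = xs + (px - tx)
            sy = ys + (py - ty)
            np.add.at(wsum, (py[ok], px[ok]), wgt[ok])
            np.add.at(acc[..., 0], (py[ok], px[ok]), (wgt * sx)[ok])
            np.add.at(acc[..., 1], (py[ok], px[ok]), (wgt * sy)[ok])

    filled = wsum > 0
    # Holes fall back to the identity warp in this sketch.
    coords = np.stack([xs, ys], axis=-1).astype(float)
    coords[filled] = acc[filled] / wsum[filled][:, None]
    return coords, filled


def render_virtual_view(image, flow, t):
    """Sample the image through the splatted warp field (nearest neighbor)."""
    coords, _ = splat_warp_field(flow, t)
    h, w = image.shape[:2]
    sx = np.clip(np.rint(coords[..., 0]).astype(int), 0, w - 1)
    sy = np.clip(np.rint(coords[..., 1]).astype(int), 0, h - 1)
    return image[sy, sx]
```

One way to use this for baseline adjustment, assuming displacement grows roughly linearly with camera translation: to scale the baseline by a factor `s` symmetrically, render each eye with `t = (1 - s) / 2` using that eye's flow toward the other eye. With `s = 0.5` each eye moves a quarter of the way toward the other (`t = 0.25`), and with `s = 1.5` each eye is extrapolated outward (`t = -0.25`). A production version would also need proper hole filling and smoother sampling than this sketch provides.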